1. Check Software Requirements

| Software | Comment | Version | Download Link |
|---|---|---|---|
| Git | Fetches the branch name and hash of the latest commit | latest | https://git-scm.com/book/en/v2/Getting-Started-Installing-Git |
| Apache Maven | Builds the Java and Scala source code | 3.8.2 or latest | https://maven.apache.org/download.cgi |
| Node.js | Builds the front end | 12.14.0 recommended (12.x to 14.x also work) | How to switch to older node.js |
| JDK | Java compiler and development tools | JDK 1.8.x | https://www.oracle.com/java/technologies/javase/javase8u211-later-archive-downloads.html |
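The Node.js and JDK ranges in this table can also be checked from a script. The following is a minimal sketch, assuming the usual output formats of `node -v` (for example `v12.14.0`) and `java -version` (for example `openjdk version "1.8.0_292"`). The function names `node_version_ok` and `jdk_version_ok` are hypothetical helpers, not part of the Kylin repository.

```shell
#!/bin/sh
# Hypothetical helpers for checking the versions listed in the requirements table.

# Succeeds if a `node -v` string such as "v12.14.0" falls in the 12.x to 14.x range.
node_version_ok() {
  _node_version=${1#v}                 # strip the leading "v"
  _node_major=${_node_version%%.*}     # keep everything before the first dot
  case $_node_major in
    ''|*[!0-9]*) return 1 ;;           # empty or non-numeric major version
  esac
  [ "$_node_major" -ge 12 ] && [ "$_node_major" -le 14 ]
}

# Succeeds if a `java -version` banner reports a 1.8.x JDK.
jdk_version_ok() {
  # The version is the first double-quoted token after "version", e.g. "1.8.0_292".
  _jdk_version=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case $_jdk_version in
    1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage against the locally installed tools (java prints its banner to stderr):
#   node_version_ok "$(node -v)" && echo "Node.js OK"
#   jdk_version_ok "$(java -version 2>&1)" && echo "JDK OK"
```

Taking the version string as an argument, rather than calling `node` or `java` inside the function, keeps the check usable on machines where the tools are not yet on the `PATH`.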

After installing the software above, verify the requirements with the following commands:

```shell
$ java -version
$ mvn -v
$ node -v
$ git version
```

Options for Packaging Script

| Option | Comment |
|---|---|
| -official | If this option is added, the package name will not contain the timestamp |
| -noThirdParty | If this option is added, third-party binaries will not be packaged into the binary; currently these are InfluxDB, Grafana and PostgreSQL |
| -noSpark | If this option is added, Spark will not be packaged into the Kylin binary |
| -noHive1 | By default, Kylin 5.0 supports Hive 1.2; if this option is added, the binary will support Hive 2.3+ |
| -skipFront | If this option is added, the front end will not be built and packaged |
| -skipCompile | If this option is added, the Java source code is assumed to be compiled already and will not be compiled again |

Other Options for Packaging Script

| Option | Comment |
|---|---|
| -P hadoop3 | Packages Kylin 5.0 to run on Hadoop 3.0+ platforms |

Package Content

| File | Content |
|---|---|
| VERSION | Apache Kylin ${release_version} |
| commit_SHA1 | ${HASH_COMMIT}@${BRANCH_NAME} |
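Because `commit_SHA1` records `${HASH_COMMIT}@${BRANCH_NAME}`, a developer can confirm which commit an unpacked package was built from. The sketch below assumes the package has been extracted to a directory with `commit_SHA1` at its top level, and that the packaging script records the full hash as printed by `git rev-parse HEAD`; both are assumptions about the layout. `check_commit_sha1` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if <package_dir>/commit_SHA1 equals "<hash>@<branch>".
# Assumes commit_SHA1 sits at the top level of the unpacked package.
check_commit_sha1() {
  _pkg_dir=$1 _hash=$2 _branch=$3
  [ -f "$_pkg_dir/commit_SHA1" ] || return 1
  # Compare only the first line; command substitution drops the trailing newline.
  _recorded=$(head -n 1 "$_pkg_dir/commit_SHA1")
  [ "$_recorded" = "$_hash@$_branch" ]
}

# Usage from inside the Kylin checkout, with the package unpacked to ../apache-kylin-5.0.0:
#   check_commit_sha1 ../apache-kylin-5.0.0 \
#     "$(git rev-parse HEAD)" "$(git rev-parse --abbrev-ref HEAD)" && echo "same commit"
```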

Package Name Convention

The package name is apache-kylin-${release_version}.tar.gz, where ${release_version} is ${project.version}.YYYYmmDDHHMMSS by default; with the -official option, the timestamp is omitted. For example, an unofficial package could be apache-kylin-5.0.0-SNAPSHOT.20220812161045.tar.gz, while an official package could be apache-kylin-5.0.0.tar.gz.
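The naming rule can be expressed as a small function. This is a sketch, not code taken from `release.sh`: `package_name` is a hypothetical helper, and it assumes the timestamp is taken from the local clock in the YYYYmmDDHHMMSS form shown above.

```shell
#!/bin/sh
# Hypothetical helper reproducing the package naming convention.
#   $1: project version, e.g. 5.0.0-SNAPSHOT
#   $2: "official" to omit the timestamp; anything else (or nothing) appends one
#   $3: optional timestamp in YYYYmmDDHHMMSS form; defaults to the current local time
package_name() {
  if [ "$2" = "official" ]; then
    printf 'apache-kylin-%s.tar.gz\n' "$1"
  else
    _ts=${3:-$(date +%Y%m%d%H%M%S)}
    printf 'apache-kylin-%s.%s.tar.gz\n' "$1" "$_ts"
  fi
}

# Usage:
#   package_name 5.0.0-SNAPSHOT "" 20220812161045   # apache-kylin-5.0.0-SNAPSHOT.20220812161045.tar.gz
#   package_name 5.0.0 official                    # apache-kylin-5.0.0.tar.gz
```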

Examples for developers and release managers

```shell
## Case 1: For developers who want to package for testing purposes
./build/release/release.sh

## Case 2: Official Apache release, Kylin binary for deployment on Hadoop 3+ and Hive 2.3+,
# where the third-party binaries cannot be distributed because of the Apache distribution policy (size and license)
./build/release/release.sh -noSpark -official

## Case 3: A package for the Apache Hadoop 3 platform
./build/release/release.sh -P hadoop3
```

2. Build source code

Clone

```shell
git clone https://github.com/apache/kylin.git
cd kylin
git checkout kylin5
```

Build the backend source code before you start debugging.

```shell
mvn clean install -DskipTests
```

Build the front-end source code.

(Please use Node.js v12.14.0. For how to use a specific version of Node.js, see how to switch to a specific Node.js version.)

```shell
cd kystudio
npm install
```

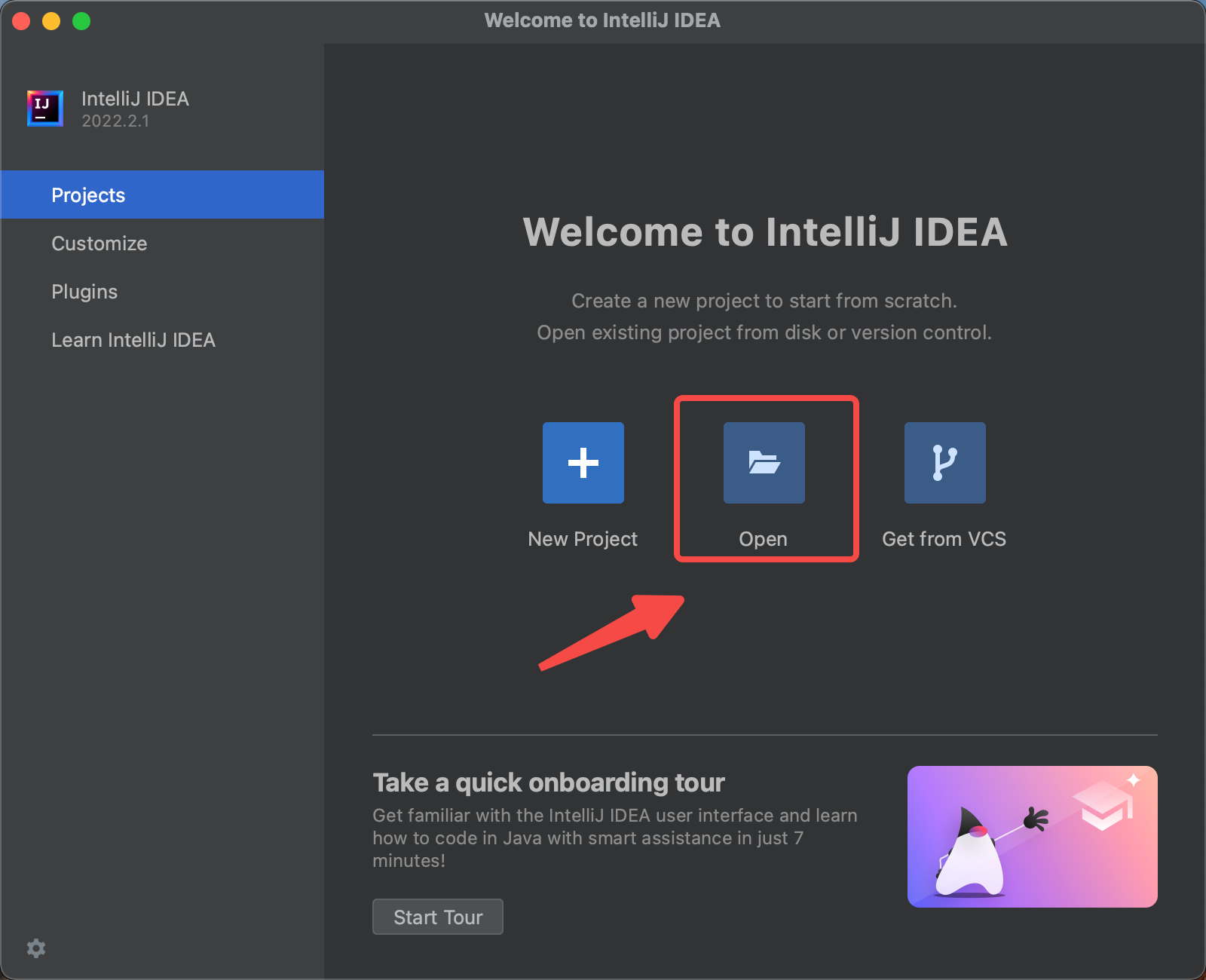

3. Install IntelliJ IDEA and build the source

Install the IDEA Community edition (the Ultimate edition also works).

Import the source code into IDEA: click Open and choose the Kylin source code directory.

Install the Scala plugin and restart IDEA.

Configure the SDKs (JDK and Scala); make sure you use JDK 1.8.x and Scala 2.12.x.

Reload the Maven projects; the `scala` directories will then be marked as source roots (shown in blue).

Build the projects. (Make sure you have executed `mvn clean package -DskipTests`; otherwise some source code will not have been generated by the Maven JavaCC plugin.)

4. Prepare IDEA configuration

Download Spark, create the IDEA run configuration for debugging, and initialize the front-end environment:

```shell
./dev-support/local/local.sh init
```

Check the status of the required services

- Check the health of ZooKeeper with the following command:

```shell
./dev-support/local/local.sh ps
```

Debug Kylin in IDEA

Start backend in IDEA

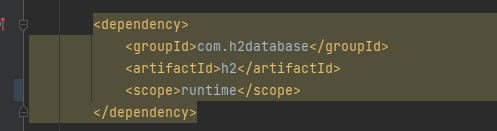

Alter the scope of dependency

com.h2databasein modulekylin-serverfromtesttoruntime.

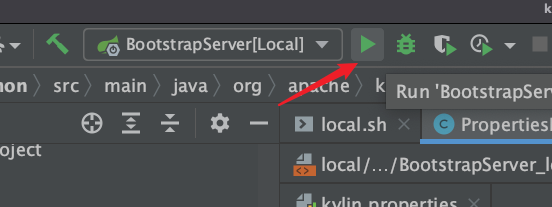

- Select “BootstrapServer[Local]” at the top of IDEA and click Run.

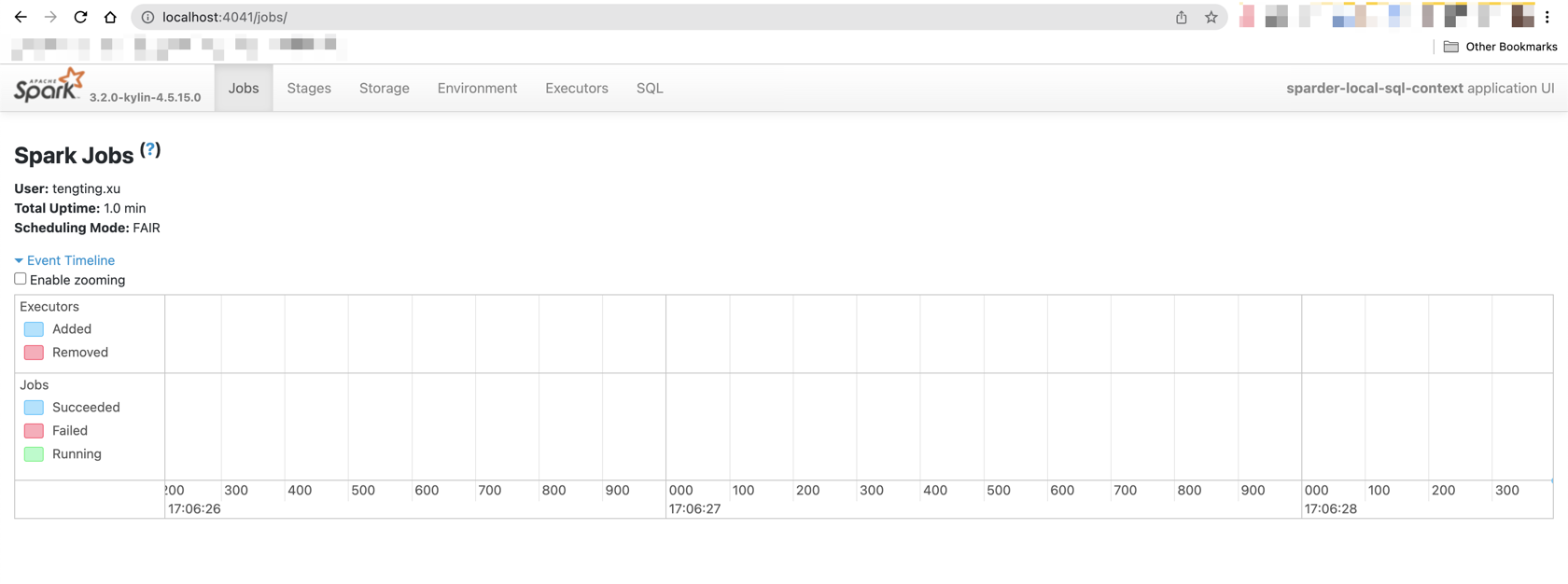

Check whether the Spark UI of Sparder has started.

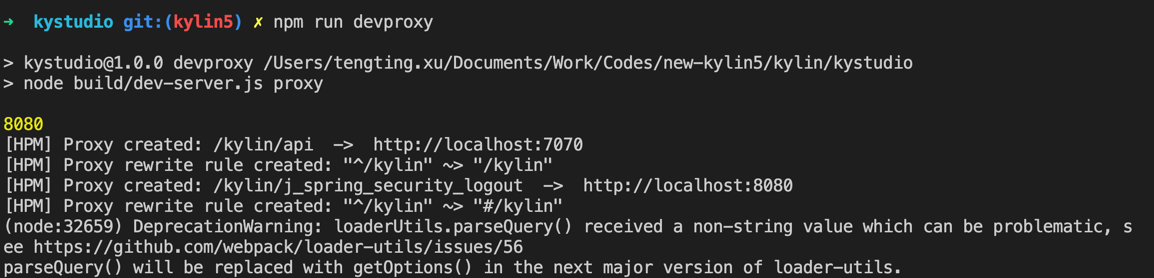

Start the front end in IDEA

- Set up the dev proxy:

```shell
cd kystudio
npm run devproxy
```

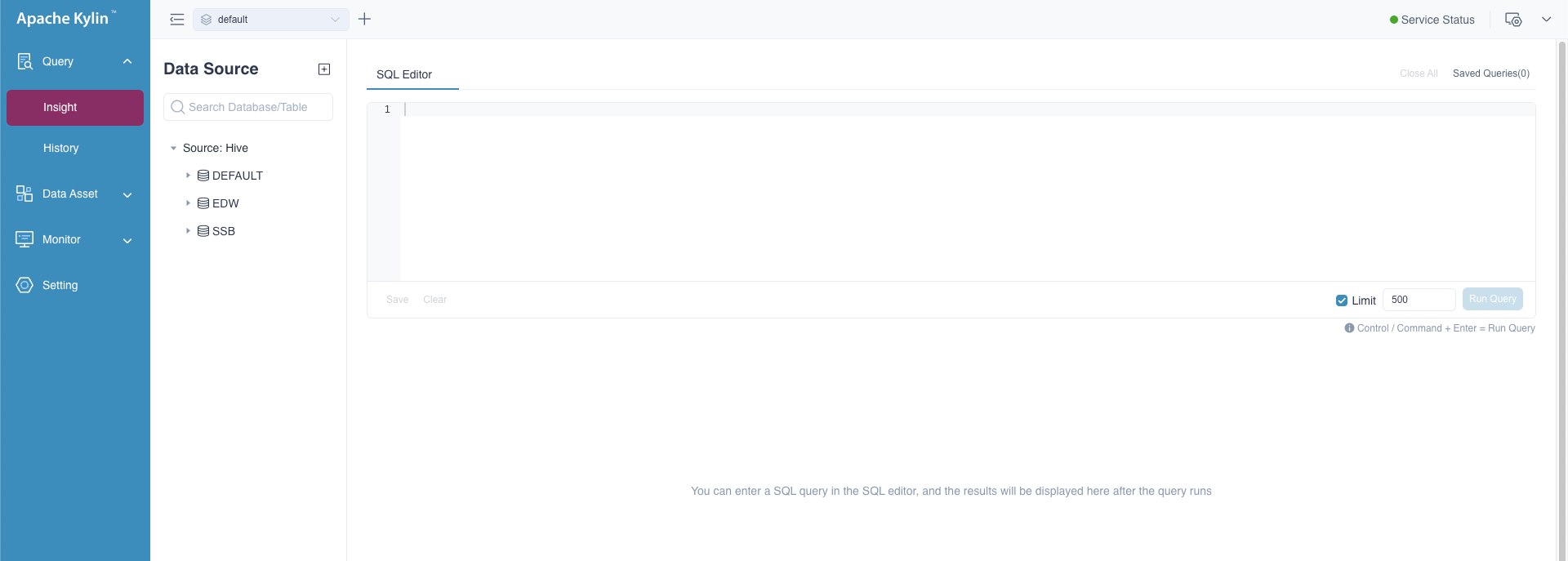

Validate Kylin’s core functions

- Visit the Kylin web UI on your laptop

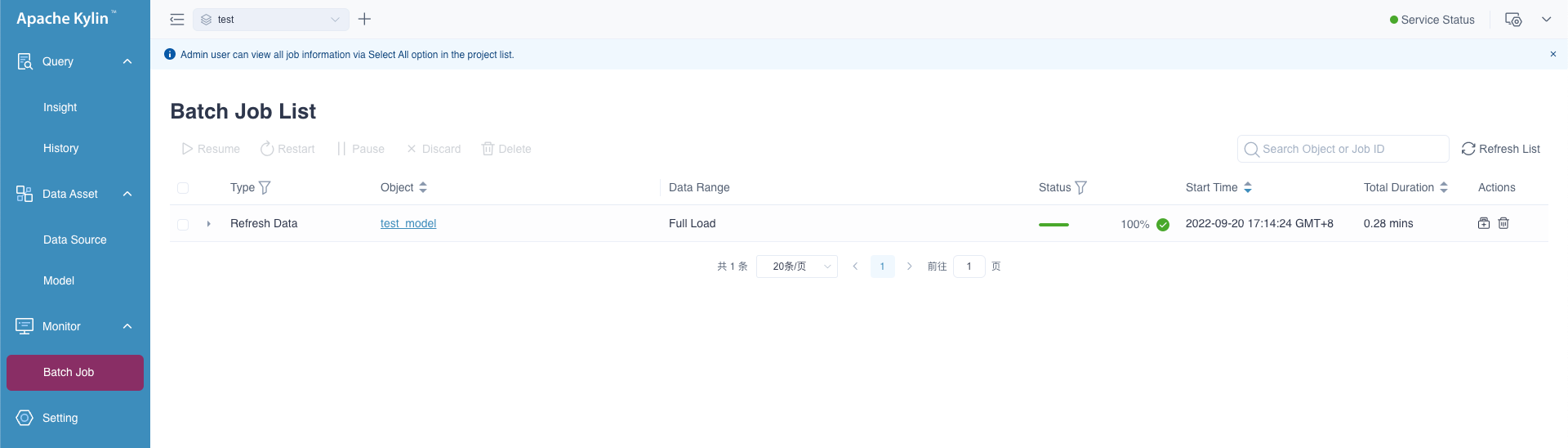

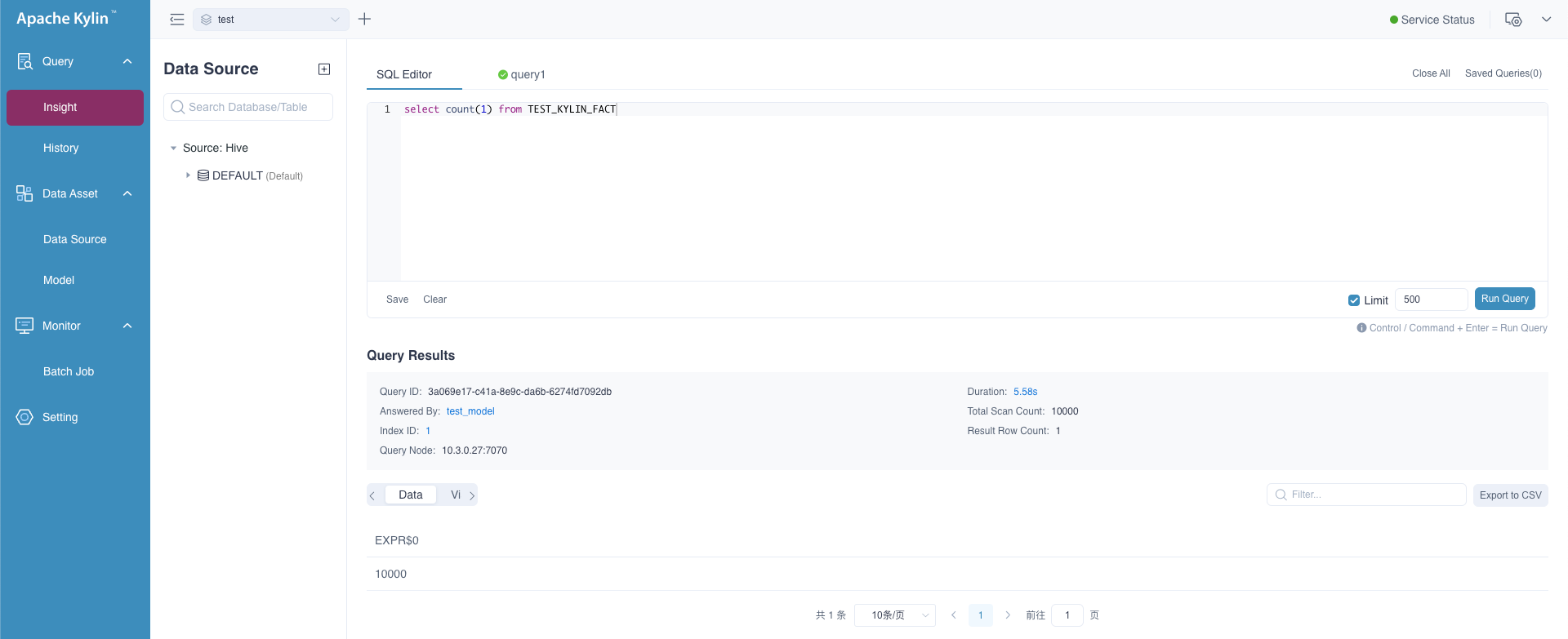

Create a new project, load tables and create a model.

Validate the cube build and query functions.

Command manual

- Use `./dev-support/local/local.sh stop` to stop all containers.
- Use `./dev-support/local/local.sh start` to start all containers.
- Use `./dev-support/local/local.sh ps` to check the status of all containers.
- Use `./dev-support/local/local.sh down` to stop all containers and delete them.
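For the ZooKeeper health check in step 4, ZooKeeper's four-letter-word command `srvr` gives a quick answer independent of the container listing. This is a minimal sketch under several assumptions: ZooKeeper is reachable on `localhost:2181` (check the port mapping shown by `local.sh ps`), `nc` is installed, and `srvr` is on the four-letter-word whitelist, which is ZooKeeper's default. `zk_is_healthy` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if a ZooKeeper server answers the "srvr" command.
# Assumes `nc` is available; host and port default to localhost:2181.
zk_is_healthy() {
  _zk_host=${1:-localhost}
  _zk_port=${2:-2181}
  # A healthy server replies with details starting with "Zookeeper version:".
  # The pipeline's status is grep's, so a refused connection or missing nc fails.
  printf 'srvr' | nc -w 2 "$_zk_host" "$_zk_port" 2>/dev/null | grep -q '^Zookeeper version'
}

# Usage:
#   zk_is_healthy && echo "ZooKeeper is up" || echo "ZooKeeper is not responding"
```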